ROG Strix RTX 2080 Overclocking Guide

10-29-2018 12:23 PM - last edited on 03-05-2024 08:25 PM by ROGBot

ROG Strix 2080 Overview

Everything about Turing is colossal. The 2080 die measures 545 mm², making it 15% larger than its Pascal relative. This silicon expanse is bursting with NVIDIA's revolutionary Tensor Cores, generating thermal output that demands better cooling than its Pascal predecessor. DirectCU III meets these requirements by extending card dimensions to 2.7 slots.

Most of that real estate is taken up by the heatsink, which connects to a precision-machined cooling plate that efficiently siphons heat away from the GPU die. The aluminum fin stack has 20% more surface area than former generations and is augmented by a sleek anodized brace that takes the brunt of the weight to reduce strain on the PCB.

On top, the shroud surrounds all-new Axial Tech IP5X-certified fans sporting a smaller hub and longer blades with barrier rings to improve air dispersion through the entire heatsink array.

To improve chassis airflow, the card also has FanConnect II headers that permit connection of two DC or PWM chassis fans. Speeds can be controlled by GPU or CPU temperatures, ensuring optimal cooling whether the system is running games or crunching through application workloads.

The 5050 RGB header also makes a return, paired with onboard light bar accents and an illuminated logo on the card's backplate. These can all be controlled with Aura software, allowing colors and effects to be synchronised with a range of compatible gear.

Along the front edge of the card there's a small SMT switch that can be toggled to select a Performance or Quiet BIOS. The latter keeps fans dormant until 55°C, and then engages an RPM curve tailored to minimise noise.

The RTX series finally does away with SLI connectors and replaces them with the NVLINK technology championed on Quadro. That's because SLI data transported over an HB Bridge is limited to a bandwidth of 2GB/s, which may create performance bottlenecks at ultra-high resolutions. Geared for next-gen displays, NVLINK on the 2080 boasts a bandwidth of 25GB/s using NVIDIA's proprietary High-Speed Signalling interconnect (NVHS).
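To put those bandwidth figures in perspective, here is a rough back-of-the-envelope sketch of how long it would take to move one uncompressed frame across each link. The 4 bytes per pixel, the 1GB = 10^9 bytes convention, and the function name are assumptions made for illustration; real multi-GPU traffic is more involved than shipping whole frames.

```python
def frame_transfer_ms(width: int, height: int, link_gb_per_s: float,
                      bytes_per_pixel: int = 4) -> float:
    """Milliseconds needed to move one uncompressed frame over a link."""
    frame_bytes = width * height * bytes_per_pixel
    return frame_bytes / (link_gb_per_s * 1e9) * 1000


# Bandwidth figures quoted in the guide: 2GB/s (HB Bridge) and 25GB/s (NVLINK).
for name, bandwidth in (("SLI HB Bridge", 2), ("NVLINK", 25)):
    ms = frame_transfer_ms(3840, 2160, bandwidth)
    print(f"{name}: {ms:.2f} ms per 4K frame")
```

Under these assumptions a 4K frame needs about 16.59 ms over the HB Bridge, which is roughly the whole frame budget at 60FPS, against about 1.33 ms over NVLINK.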

Placed near the NVLINK connector, there's another SMT button that allows RGB lighting to be switched off for a stealthier appearance. Please note that this setting is only retained if the Aura Graphics software is installed on the system.

Precision Engineering

All Strix cards are manufactured using Auto-Extreme technology, a fully automated assembly process used to precisely install and solder all SMT and through-hole components in a single pass. This exposes them to less heat and delivers optimal contact between each device and the PCB, ensuring all cards meet the high standards users expect from the Strix series.

Connectivity

Taking inspiration from the ROG motherboard line, a new matte black I/O shield can be found at the rear. Connection options include two HDMI 2.0b ports and two DisplayPort outputs that support VESA Display Stream Compression (DSC 1.2) and 8K at 60Hz. More strikingly, there's a USB Type-C connector dubbed VirtualLink that's capable of supplying up to 27 watts of power and transmitting data at 10Gbps. The port is designed to reduce VR I/O port requirements by combining up to four HBR3 DisplayPort lanes through a single cable, making Turing a sound investment for next generation headsets.

Power Delivery

To ensure stability under all loads, power delivery is handled by a 10-phase VRM array that features 70A power stages, SAP II chokes, and solid polymer capacitors. The entire array is fed by an improved power plane that draws power from two eight-pin power connectors. Nearby, there are six read points that make it easy to measure all major voltage domains with a digital multi-meter.

GPU Tweak II takes on Turing

To overclock the Strix, we need to install GPU Tweak II. Featuring an intuitive UI, this opens control of all the elements needed to extract the most from the card. This section breaks down all the key features and their function (advanced users can skip this section).

GPU Tweak II Download (You will need version 1.8.7.0 as a minimum for Turing support)

Gaming Mode: Sets the GPU clock to 1860MHz. This is the default preset.

Silent Mode: Sets the GPU clock to 1830MHz, with a reduced power target of 90%.

OC Mode: This mode sets the GPU clock to 1890MHz whilst increasing the power target to 125%. This is the most aggressive profile.

My Profile: A quick selection option for your saved profiles.

0dB Fan: When paired with the Strix or other compatible cards, this feature engages passive cooling until a temperature of 55°C. This mode is only accessible when Silent Mode is enabled.

Professional Mode: Professional Mode is designed for advanced users, providing control of all the card's faculties through an intuitive panel.

GPU Boost Clock: Sets an offset for the GPU boost clock. When the card is under load, the maximum operating frequency is determined by GPU core temperature and power draw. At stock settings, the RTX 2080 Strix will boost to around 1950MHz. GPU Boost 4.0 allows us to define all available voltage points. This feature is accessible by clicking User Define.

Memory Clock: This controls the offset for the memory clock. Typically, Micron GDDR6 can achieve a 600MHz to 1100MHz overclock, taking it beyond the 2GHz barrier. While that sounds impressive, memory bandwidth has little impact on game performance. Users who have a 4K display or favor downsampling may see some benefits, though. Memory-related instability will normally manifest as an application crash or a system hang. To simplify debugging, it's best to overclock memory after achieving a stable GPU core overclock.

Fan Speed: With GPU Tweak II, it's now possible to adjust left and right fan speeds independently of the central fan, providing better control over cooling and acoustics. Although one can create a custom fan curve, the default fan profiles deliver a good balance between noise and performance. I found no need to modify this throughout testing.
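For anyone who does want a custom curve, the sketch below shows how a curve defined by a few points translates into fan speed, and why steep segments are best avoided. It assumes simple linear interpolation between points, which may not match what GPU Tweak II does internally, and the curve points are hypothetical apart from the 50% at 65°C and 80% at 70°C pair that the guide uses as a cautionary example later on.

```python
def fan_speed(curve, temp_c):
    """Linearly interpolate fan speed (%) from (temp in °C, speed in %) points."""
    points = sorted(curve)
    if temp_c <= points[0][0]:
        return points[0][1]
    for (t0, s0), (t1, s1) in zip(points, points[1:]):
        if temp_c <= t1:
            return s0 + (s1 - s0) * (temp_c - t0) / (t1 - t0)
    return points[-1][1]


def steepest_slope(curve):
    """Largest change in fan speed (%) per °C anywhere on the curve."""
    points = sorted(curve)
    return max((s1 - s0) / (t1 - t0)
               for (t0, s0), (t1, s1) in zip(points, points[1:]))


gentle = [(55, 30), (65, 50), (80, 80)]  # hypothetical points
steep = [(55, 30), (65, 50), (70, 80)]   # 50% at 65°C, 80% at 70°C

print(steepest_slope(gentle))  # 2.0 (% per °C)
print(steepest_slope(steep))   # 6.0 (% per °C)
```

On the steep curve, a 1°C wobble around 67°C moves the fan by 6%, which is what makes the speed audibly hunt up and down.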

GPU Voltage: On Turing, GPU core voltage increases are expressed as a percent scale that references multiple points. By default, these upper voltage points are locked. Once the voltage offset is increased, the upper points are unlocked, providing additional headroom. The maximum voltage on Turing is 1.068V.

Power Target: Increasing this setting allows the GPU to draw additional power. Turing is especially power constrained, so even if you do not plan on overclocking, it's worthwhile setting this slider to maximum. Note that the % scale means very little when comparing with other GPUs because the base TDP varies. The Strix can draw a maximum of 245W when the target is set to 125%.
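As a quick illustration of why the percentage only makes sense relative to a card's own base limit, the sketch below converts a Power Target percentage into watts. It assumes the scale is linear and anchored on the 245W at 125% figure above; the 100% value it produces is derived from that assumption rather than quoted from ASUS, and the function name is made up for the example.

```python
def power_limit_watts(target_pct: float, max_watts: float = 245.0,
                      max_target_pct: float = 125.0) -> float:
    """Power limit in watts for a given Power Target %, assuming a linear scale."""
    base_watts = max_watts / (max_target_pct / 100)  # implied 100% limit
    return base_watts * target_pct / 100


for pct in (90, 100, 125):
    print(f"{pct}% -> {power_limit_watts(pct):.1f} W")
```

With those assumptions, 100% works out to 196W and the 90% target used by Silent Mode to roughly 176W.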

FPS Target: No real introduction needed. It's recommended to set the target to one or two frames below that of the refresh rate of your display. For non-G-Sync users, this is recommended in order to avoid screen tearing.

GPU Temp Target: This sets the maximum GPU temperature. When the applied value is reached, GPU voltage and frequency will be reduced so that the core temperature threshold is not breached. The Strix cooler keeps temps between 50°C and 70°C under load (recorded with an ambient temp of 21°C). The Strix 2080's huge cooling capacity allows the power target to be used as a priority.

Tools: This section can be used to install the Aura Graphics utility, which provides full control of the card's RGB lighting. The utility can be run independently or users can install the regular Aura software to sync lighting with compatible hardware.

Game Booster: When performance is key, having anything unnecessary running in the background is frowned upon. This option automatically adjusts the visual appearance of Windows for best performance and disables unnecessary services.

Xsplit Gamecaster: Allows both streaming and recording, an overlay, and GPU Tweak II profile switching. The free version is limited to 720p and 30FPS recording.

Monitor: Allows monitoring of vital stats. By clicking the expand button, items can be rearranged or discarded. A logging feature that records stats is also available.

OSD: GPU Tweak II now features an on-screen display that enables statistics to be tracked in real time. Preferred stats, text color, and size can be selected from the monitor tab. This can be particularly useful when establishing an overclock because it allows tracking of the GPU Boost Clock and power limits. When starting out, the metrics ticked in the image below are of use:


1. Depending on power consumption and GPU load, most 2080 samples can achieve a core frequency between 2050MHz and 2100MHz.

2. Depending on the quality of the IC, memory frequencies between 1900MHz and 2200MHz are possible. Despite the range, performance gains are limited because most games are not bottlenecked by memory bandwidth. The only scenario in which memory performance matters is driving UHD resolutions in tandem with heavy anti-aliasing (although framerates become a limiting factor here, too).

3. The GPU Boost Clock offset merely sets the maximum target frequency. The actual frequency under load is controlled by GPU Boost 4.0 and will scale based on voltage, power, and thermal conditions.

4. Raising the frequency at lower voltage points provides additional performance in some scenarios. This technique is sample dependent and requires a trial and error approach. Typically, the GPU spends most of its time between 1.040V and 1.050V, so the frequencies applied between these points are key.

5. Past a certain point, GPU core voltage has minimal impact on overclocking stability. Voltage increments should be applied sparingly because they raise power consumption, which in turn limits Boost clock frequency (due to TDP limits).

6. NV Scanner allows one-click overclocking. It works by conducting multiple stress tests and adjusting frequency at each voltage point. In contrast, when using the GPU Boost Clock slider, the same frequency offset is applied across the entire VF curve. The offset approach is cruder because it assumes core voltage will be sufficient at every point along the frequency curve.

7. Turing overclocking range does not seem to be affected by temperatures. Due to power limitations, even when temperatures are kept in the range of 35°C to 40°C, we see less than a 3% improvement in benchmark scores. The increased performance comes from a higher sustained boost frequency (when the GPU is not under heavy loads).

8. The default fan curve is sufficient and doesn't hinder overclocking potential.
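Point 6 is easier to picture with a small model of the VF curve. The sketch below is only an illustration: the voltage and frequency pairs are hypothetical, not values read from a real card, and the helper names are made up. It contrasts a single offset applied to every point (what the GPU Boost Clock slider does) with a separate offset per voltage point (the kind of result a per-point scan or manual curve editing produces).

```python
# Hypothetical VF curve: (voltage in V, frequency in MHz) pairs.
stock_curve = [(0.950, 1830), (1.000, 1905), (1.043, 1950), (1.068, 1980)]


def apply_offset(curve, offset_mhz):
    """Slider-style overclock: the same offset at every voltage point."""
    return [(volts, mhz + offset_mhz) for volts, mhz in curve]


def apply_per_point(curve, offsets_mhz):
    """Curve-style overclock: an individual offset for each voltage point."""
    if len(offsets_mhz) != len(curve):
        raise ValueError("need exactly one offset per voltage point")
    return [(volts, mhz + off) for (volts, mhz), off in zip(curve, offsets_mhz)]


print(apply_offset(stock_curve, 100))
print(apply_per_point(stock_curve, [60, 80, 100, 100]))
```

In the second call the lower voltage points receive smaller offsets, which is the sort of adjustment that helps when a flat +100MHz proves unstable at low voltage but fine near the top of the curve.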

Overclocking the Strix 2080

Before we get started, it's wise to make sure everything works as expected. Leave the GPU at stock clocks, and use games or a preferred stress test to check stability (I use Time Spy Extreme). Be sure to apply the Performance BIOS mode by using the SMT switch, and ensure that the latest WHQL certified driver is installed. With that out of the way, it's time to push the envelope...

1. Set the Power Target to 125% (maximum). This allows the GPU to draw up to 245W.

2. GPU Temp Target can be left at the default 84°C. During our testing, the Strix 2080 remained comfortably under 75°C when overclocked, which is well within thermal limits of the GPU.

3. The GPU Voltage slider can be increased to 100%. This unlocks three additional voltage points.

4. The Boost Clock slider adjusts the target GPU frequency. Any changes are applied to every point along the frequency curve. Start by applying a 50MHz offset (increase) and then test for stability. If all is well, apply another 20MHz and repeat the test. Keep increasing the clock until the GPU is not stable in your preferred stress test. The Turing architecture is very forgiving when pushed too far - most crashes result in a brief hang, followed by driver recovery closing the application (which will take you back to the desktop). When this is experienced, reduce the Boost Clock to a stable operating frequency.

5. The Memory Clock slider adjusts the frequency of the Micron GDDR6 memory. Do not overclock this domain until a stable GPU Boost frequency is established. Stable range can vary from +600 to +1100 MHz over the default frequency. To test a memory overclock, use Time Spy Extreme, because it renders at 4K and places a lot of assets into the frame buffer, which makes it good for finding instability.

6. The default Strix fan profiles are tuned to keep noise to a minimum and GPU temperatures under 84°C. If acoustics are not a concern, you can define a custom curve for the central fan. Note that it's preferable to avoid large increases in fan speeds between small temperature increments. For example, if you set a fan speed of 50% at 65°C, and 80% at 70°C, the fan speed will modulate excessively.
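Step 4 above amounts to a simple search loop, sketched below. GPU Tweak II has no scripting hook that I am aware of, so `is_stable` is a hypothetical stand-in for "apply this offset by hand, run the stress test, and report whether it passed". The `max_offset` ceiling is also an assumption, added so the loop always terminates.

```python
def find_stable_offset(is_stable, first_step=50, step=20, max_offset=300):
    """Return the highest Boost Clock offset (MHz) that passed the stress test.

    Starts at +50MHz, then climbs in +20MHz steps until a test fails,
    and falls back to the last offset that passed (0 if none did).
    """
    stable_offset = 0
    candidate = first_step
    while candidate <= max_offset and is_stable(candidate):
        stable_offset = candidate
        candidate += step
    return stable_offset


# Stand-in for a real stress test: pretend this sample fails above +115MHz.
def fake_stress_test(offset_mhz):
    return offset_mhz <= 115


print(find_stable_offset(fake_stress_test))  # 110
```

The same loop works for the Memory Clock in step 5 with a larger starting offset, provided the GPU offset found here is left applied while testing.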

Stress Test

3DMark Benchmark Download

Once initial settings are established, launch Time Spy Extreme to initiate a stress test. Time Spy adequately stresses the Turing architecture because it incorporates multiple DX12 technologies. Graphics Test One will loop 20 times before completing. A frame rate stability result below 97% is deemed a failure and indicates the GPU couldn't maintain a consistent performance level for the duration of the test. This is usually caused by a sharp drop in clock speeds due to GPU temperatures.
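The pass mark can be reproduced by hand from the per-loop results. The sketch below assumes the stability percentage is the worst loop score divided by the best loop score, which is how I understand 3DMark to calculate it; treat that definition, the function names, and the loop scores as assumptions for illustration.

```python
def frame_rate_stability(loop_scores):
    """Worst loop relative to the best loop, as a percentage."""
    return min(loop_scores) / max(loop_scores) * 100


def passes_stress_test(loop_scores, threshold=97.0):
    """True when stability meets the 97% pass mark."""
    return frame_rate_stability(loop_scores) >= threshold


# Hypothetical scores for 20 loops of Graphics Test One.
steady = [4300] * 10 + [4260] * 10   # clocks settle slightly as the card warms up
throttled = [4300] + [4100] * 19     # clocks drop sharply after the first loop

print(passes_stress_test(steady))     # True  (about 99.1%)
print(passes_stress_test(throttled))  # False (about 95.3%)
```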

Final settings

Running the Time Spy Extreme stress test shows whether the boost clock is sustainable within the power and thermal limits of the GPU. In OC Mode, the Strix boasts a boost clock of 1890MHz, landing 90MHz higher than the Founders Edition, so we're already punching above stock operating points. With the settings shown below, our Strix card was able to maintain a GPU overclock in the range of 2070-2100MHz together with a 600MHz memory overclock. Throughout the duration of the test, the GPU temp remained below 73°C.

A deeper evaluation

To evaluate overclock stability at lower voltage points along the VF curve, use a variety of your favorite games. This helps expose instability that occurs due to insufficient voltage at a given Boost Clock frequency and is suitable for testing both offset and VF curve overclocking.

Power Limit: 125%

Voltage: 100

GPU Boost Clock (MHz): +100

Memory Clock (MHz): +600

Specs:

7900X @ 4.7GHz

ASUS Apex VI

32GB @ 4000MHz 16-17-17-41

ASUS RTX 2080 Strix

Thermals and Acoustics

Temperatures and noise levels were monitored with the Time Spy Extreme Stress Test looping 20 times. Rendering at 4K and taking roughly 20 minutes, the test represents a worst-case scenario, especially if your display isn't native 4K. Despite this, the Strix 2080 rarely broke 70 degrees Celsius (Quiet BIOS) and remained inaudible even though it was roughly 30cm from my seating position. Therefore, the Quiet BIOS is useful when gaming in an extremely low-noise environment. Even in Performance BIOS mode, the card is exceptionally quiet, generating a mere 43dB under load.

Benchmarks:

Final Fantasy XV

Final Fantasy XV utilizes multiple NVIDIA technologies, making it a taxing entry into any benchmark suite. These include Hairworks, VXAO, TurfEffects, and Shadowlibs. Running with our manual overclock gained the RTX 2080 a 13% advantage over the Strix 1080Ti OC. However, at 4K and maximum visual settings, neither card is able to maintain a steady 60FPS. Moving down to 1440P, the overclocked RTX 2080 manages to pump out a reasonable 85.2FPS.

Deus Ex Mankind Divided

Deus Ex Mankind Divided takes us into a dystopian future, featuring an incredibly detailed and interactive hub world. Maximum settings were applied here, apart from MSAA, because the performance overhead is substantial. On the Strix 2080, a steady 2055MHz widens the gap against big Pascal.

Shadow of the Tomb Raider

Crystal Dynamics' Tomb Raider franchise is known for making good use of the latest visual tech. Shadow of the Tomb Raider is no exception to the rule, and an upcoming patch will add RTX features. While it's a shame the update isn't available yet, the game is taxing enough without it. At maximum fidelity, the overclocked 2080 can maintain an 81FPS average with SMAAT x2 enabled. This is the anti-aliasing setting recommended for a balance of performance and image quality, although it can come down to user preference. Cranking SMAA to x4 does little to improve visuals and has a negative impact on performance.

Hitman 2016

With IO Interactive's Hitman, we see DX12 widening the gap between cards. There is also some repeatable hitching throughout the benchmark on the 1080Ti, resulting in low minimum frame rates. However, at SSAA 2X, even the overclocked RTX can't maintain a steady 60 FPS. High refresh rate gaming at 4K resolutions likely requires a 2080Ti in an SLI configuration.

FarCry 5

FarCry 5 produces solid performance, maintaining over 100FPS without dipping far below for the duration of the benchmark. In an open-world title such as this, those numbers are impressive and the experience is incredibly immersive.

Thoughts & Conclusion

Overclocking the Strix 2080 presents nothing but benefits, and the increased power consumption doesn't impede the user experience at all. With an average Boost Clock of 2050MHz, we see a 5.6% increase in performance across game tests (silent fan profile applied). Moreover, some samples will be able to achieve clock frequencies in the region of 2085MHz to 2100MHz, pushing things even further. Unfortunately, due to game title launch dates being pushed back, we're not able to test RTX features in a meaningful way. Hopefully, within the next few months, we will be able to put the card through a wider range of tests to see how it performs.


While that’s all you need to overclock the Strix 2080, we’ve taken the time to take a deeper look at Turing and ray tracing. If you’re interested in either subject, the sections below provide a host of useful information.

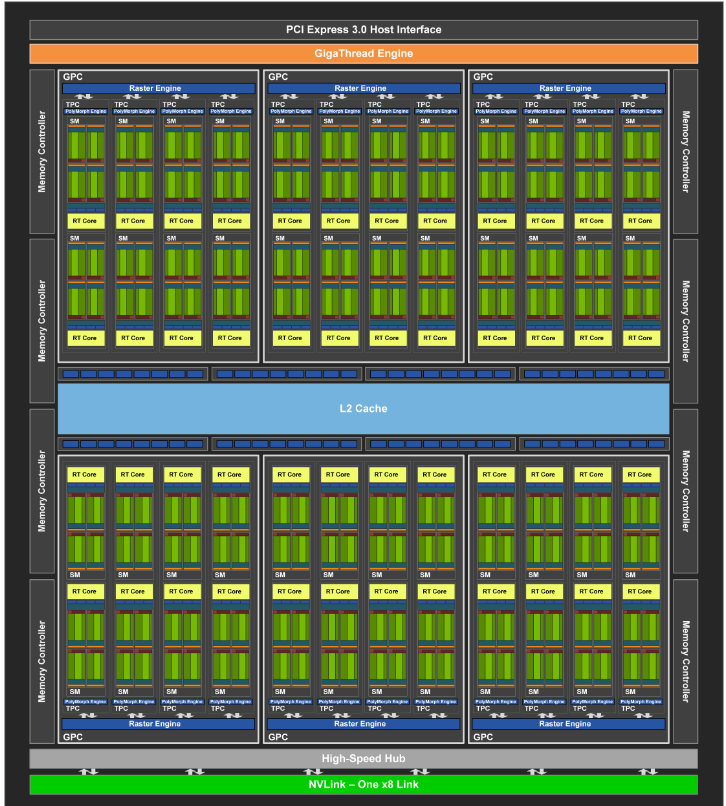

NVIDIA Turing (TU104)

The RTX 2080 (TU104) is made up of 13.6 billion TSMC 12nm FinFET transistors taking up 545 mm² of space. Despite not being the largest sibling in the Turing line up, that still tops NVIDIA's previous flagship 1080Ti (GP102) by 15%.

On closer inspection, we can see that Turing closely resembles the Volta architecture. On the full TU104 die, a total of six processing clusters with 3072 combined CUDA cores do most of the work, pumping out 9.2 TFLOPs, compared with the GTX 1080's 2560 cores delivering a throughput of 8.9 TFLOPs. For AI and Deep Learning, 384 Tensor Cores are divided over 48 Streaming Multiprocessors (SM), with two SMs placed in each of the 24 Texture Processing Clusters (TPC). The RTX 2080 itself ships with 23 of those TPCs enabled, which works out to 46 SMs, 2944 CUDA cores, and 368 Tensor Cores. Every SM also contains an RT Core (Ray Tracing), intended to unburden the rest of the GPU from the immense computational power required to cast rays in real-time.

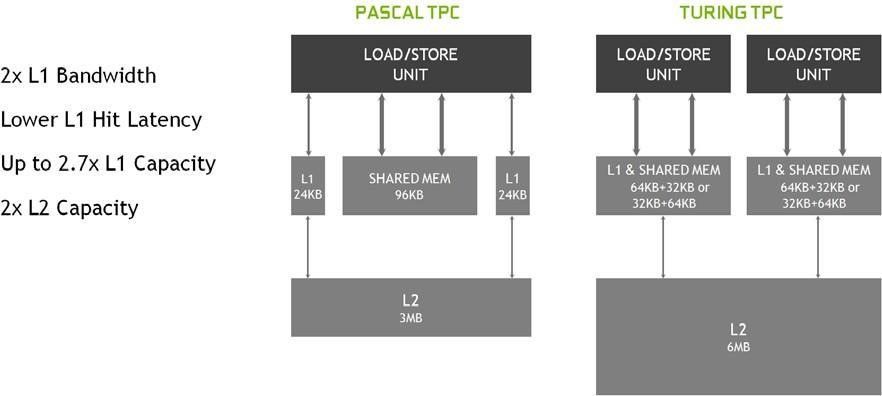

The frame buffer gets an upgrade to 8GB of GDDR6 (from Micron), delivering higher bandwidth and a 40% reduction in signal crosstalk. This is fed by eight 32-bit memory controllers, delivering 448GB/s of bandwidth over a 256-bit bus. Despite having the same bus width as the GTX 1080, that's a 40% increase in throughput over the older card's 320GB/s, thanks to GDDR6 and the redesigned architecture. The Turing memory subsystem is now unified, allowing the L1 cache to be combined in a shared memory pool. Along with an increase in L1 and L2 cache size, this results in twice the bandwidth per Texture Processing Cluster in comparison to Pascal.
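The 448GB/s figure follows directly from the bus layout. The sketch below assumes a GDDR6 data rate of 14Gbps per pin, which is not stated in the guide, and uses a made-up function name; the eight 32-bit controllers come from the paragraph above.

```python
def memory_bandwidth_gb_s(data_rate_gbps: float, controllers: int = 8,
                          controller_width_bits: int = 32) -> float:
    """Peak memory bandwidth in GB/s from per-pin data rate and bus width."""
    bus_width_bits = controllers * controller_width_bits  # 8 x 32 = 256-bit bus
    return data_rate_gbps * bus_width_bits / 8            # bits -> bytes


print(memory_bandwidth_gb_s(14))  # 448.0
```

The same function can be used to estimate what a memory overclock buys in theory: bandwidth scales linearly with the data rate while the bus width stays fixed.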

Other Improvements

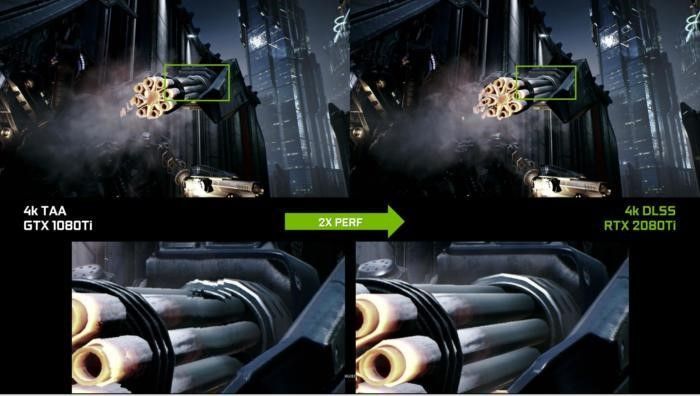

Deep Learning Super Sampling (DLSS)

Legacy super sampling renders frames at two or four times the native resolution and then downsamples them to fit the display. However, the downside of this approach is that it requires a considerable amount of video memory and bandwidth, generating a large performance penalty. As a viable alternative, NVIDIA developed a temporal antialiasing method (TXAA) that uses a shader-based algorithm to sample pixels from current and previous frames. This approach yields mixed results because it blends multi-sampling over the top of temporal accumulation, rendering a soft or blurred image.

On Turing, a technique referred to as DLSS (Deep Learning Super Sampling) is employed, making use of NVIDIA's new Tensor Cores to produce a high-quality output while using a lower sample count than traditional antialiasing methods. Typically, this technology is used for image processing, where it distinguishes on-screen objects simply by looking at raw pixels. That ability also permits Deep Learning to be utilized for games. NVIDIA references images containing an incredible 64x super-samples and with its neural network attempts to match them to the target resolution output frame. Because this is all done on the Tensor Cores using AI, it avoids blurring and other visual artefacts. NVIDIA is keen to get everyone on board, as developers need only send their game code to process on a DGX supercomputer that generates the data needed by the NVIDIA driver. With a growing list of supported titles, Turing’s DLSS is ripe for experimentation.

There are currently two ways for us to test DLSS, and one of them is not fit to use as a comparison. Although Final Fantasy's implementation works fine, its Temporal AA alternative doesn't and produces unwieldy stuttering, making it an unfair comparison. That leaves us with Epic's Infiltrator Demo, which with a recent update allows us to make a fair comparison against TXAA.

With DLSS enabled, the performance numbers speak for themselves. Although there are some frame timing issues due to scene transitioning, DLSS provides a near 33% advantage over TXAA. Moreover, there wasn’t a difference in obtainable clock speed or power consumption between the two. Keep in mind that this is a singular example, so this may change in future once the technology is used outside of a canned environment.

The image quality between these modes is currently a hot topic and fundamentally troublesome to convey. DLSS is far better in motion than it is in image stills, meaning one must see the demo running to make an informed decision. Of course, there are YouTube videos available, but these are heavily compressed, resulting in discernible differences being lost. The other problem is that Infiltrator puts NVIDIA at an advantage. By being repetitive, the demo aids the learning process. This produces a more accurate result than a scene that's rendered based on player input. Personally, I feel there is a visual trade-off when compared with TXAA, but it's marginal, so the performance advantage cannot be ignored. DLSS is set to drop soon in a growing list of available and upcoming games, so it'll be interesting to see if performance mirrors the gains seen in this test.

Variable Rate Shading (VRS)

Turing now has full support for Microsoft's Variable Rate Shading (VRS), a new rendering method that concentrates shading fidelity where the player is looking and lowers it in peripheral vision. We have seen similar techniques on previous generations in the form of Multi-Resolution Shading, but with VRS, the granularity is far superior, as developers can now use a different shading rate for every 16-pixel x 16-pixel region. Forgoing the out of view detail in a given scene means developers can focus GPU power where it matters, something that has far-reaching implications for virtual reality, where maintaining high framerates is crucial.
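To give a sense of that granularity, the sketch below counts how many 16 x 16 pixel regions a frame breaks into, each of which could in principle carry its own shading rate. The function name is made up for the example, and rounding partial regions up at the frame edge is an assumption.

```python
import math


def vrs_regions(width: int, height: int, tile: int = 16) -> int:
    """Number of tile x tile pixel regions needed to cover a frame."""
    return math.ceil(width / tile) * math.ceil(height / tile)


print(vrs_regions(3840, 2160))  # 32400 regions at 4K
print(vrs_regions(1920, 1080))  # 8160 regions at 1080p
```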

Turing also furthers support for DX12, with architectural changes that allow integer and floating-point calculations to be performed concurrently (asynchronous compute), resulting in more efficient parallel operation than Pascal. With its Tensor Cores handling antialiasing and post-processing workloads, these new technologies promise to reduce CPU overhead and produce higher frame rates.

RTX – Ray Tracing

Ray tracing isn't a brand-new technology if we talk about it in an all-encompassing way. Many of us have witnessed ray tracing at some point in our lives even though we are unaware of it. In the movie industry, CGI relies upon rays being cast into a scene to portray near photorealism, with rendering often taking months to complete. Transitioning this technique into games is no simple task, but this technological leap must begin somewhere, and that's what NVIDIA has promised to deliver on Turing.

RTX is ray tracing at a fundamental level, using a modest count of one or two samples per pixel to simulate anything from global illumination to detailed shadows. By comparison, CGI in a Hollywood blockbuster would cast thousands at an individual pixel, something that is currently impossible for real-time graphics. To compensate, NVIDIA is utilising the Deep Learning Tensor Cores to fill in the blanks by applying various denoising methods, whilst the RT Cores cast the rays required to bounce and refract light across the scene. Of course, it is not solely the samples per pixel that account for the heavy computational workload.

Recently, I've been speaking with Andy Eder (SharkyUK), who is a professional software developer, with over 30 years of experience in both real-time and non-real-time rendering. Knowing Andy quite well, I was eager to hear his take on RTX, and ask why ray tracing is so incredibly hardware intensive.

What is Ray Tracing?

Ray tracing has been around for many years now, with the first reported algorithm originating back in the 1960s. This was a limited technique akin to what we now refer to as ray casting. It was a decade later when John Turner Whitted introduced his method for recursive ray tracing to the computer graphics fraternity. This was a breakthrough that produced results forming the basis of ray tracing as we understand it today.

For each pixel, a ray was cast from the camera (viewer) into the scene. If that ray hit an object, more rays would be calculated depending on the surface properties. If the surface was reflective, then a new ray would be generated in the reflected direction and the process would start again, with each 'hit' contributing to the final pixel colour. If the surface was refractive, then another ray would be needed as it entered the object, again considering the material properties and potentially changing its direction. It is the directional change of the ray through the object that gives rise to the distortion effects we see when looking at things submerged in water or through glass. Of course, materials like glass have both reflective and refractive properties, hence additional rays would need to be created to take this into account.

There is also a need to determine whether the current position is being lit or is in shadow. To determine this, shadow rays are cast from the current hit point (the point of intersection) towards each light source in the scene. If a shadow ray hits another entity on the way, then the point of intersection is in shadow. This process is often referred to as direct lighting. It is this recursive nature that affords greater realism, albeit at the expense of significant computational cost.
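The recursion described above can be sketched in a few dozen lines. This is a minimal illustration rather than Andy's renderer or anything NVIDIA ships: it handles only spheres, a single point light, shadow rays, and reflection (refraction is left out), and every name, constant, and scene value in it is made up for the example.

```python
import math
from dataclasses import dataclass


def add(a, b): return tuple(x + y for x, y in zip(a, b))
def sub(a, b): return tuple(x - y for x, y in zip(a, b))
def scale(a, k): return tuple(x * k for x in a)
def dot(a, b): return sum(x * y for x, y in zip(a, b))
def normalize(a): return scale(a, 1.0 / math.sqrt(dot(a, a)))


@dataclass
class Sphere:
    center: tuple
    radius: float
    colour: tuple
    reflectivity: float = 0.0

    def intersect(self, origin, direction):
        """Distance to the nearest hit in front of the ray, or None (unit direction)."""
        oc = sub(origin, self.center)
        b = 2.0 * dot(oc, direction)
        c = dot(oc, oc) - self.radius ** 2
        disc = b * b - 4.0 * c
        if disc < 0:
            return None
        t = (-b - math.sqrt(disc)) / 2.0
        return t if t > 1e-4 else None


BACKGROUND = (0.1, 0.1, 0.2)
LIGHT_POS = (5.0, 5.0, 0.0)


def nearest_hit(scene, origin, direction):
    best = None
    for obj in scene:
        t = obj.intersect(origin, direction)
        if t is not None and (best is None or t < best[0]):
            best = (t, obj)
    return best


def trace(scene, origin, direction, depth=0, max_depth=3):
    hit = nearest_hit(scene, origin, direction)
    if hit is None:
        return BACKGROUND
    t, obj = hit
    point = add(origin, scale(direction, t))
    normal = normalize(sub(point, obj.center))

    # Shadow ray: cast from the hit point towards the light (direct lighting).
    to_light = sub(LIGHT_POS, point)
    light_dist = math.sqrt(dot(to_light, to_light))
    light_dir = normalize(to_light)
    blocker = nearest_hit(scene, point, light_dir)
    in_shadow = blocker is not None and blocker[0] < light_dist
    diffuse = 0.0 if in_shadow else max(0.0, dot(normal, light_dir))
    colour = scale(obj.colour, 0.1 + 0.9 * diffuse)  # 0.1 = ambient term

    # Reflection ray: recurse, with each further hit contributing to the colour.
    if obj.reflectivity > 0 and depth < max_depth:
        reflected = sub(direction, scale(normal, 2.0 * dot(direction, normal)))
        bounce = trace(scene, point, normalize(reflected), depth + 1, max_depth)
        colour = add(scale(colour, 1 - obj.reflectivity),
                     scale(bounce, obj.reflectivity))
    return colour


red = Sphere((0.0, 0.0, -5.0), 1.0, (1.0, 0.0, 0.0), reflectivity=0.3)
blocker = Sphere((2.5, 2.5, -2.0), 0.5, (0.0, 1.0, 0.0))
lit = trace([red], (0.0, 0.0, 0.0), (0.0, 0.0, -1.0))
shadowed = trace([red, blocker], (0.0, 0.0, 0.0), (0.0, 0.0, -1.0))
print(lit, shadowed)
```

The second scene places a small sphere between the hit point and the light, so the same pixel comes back darker. A full renderer runs `trace` once (or many times) for every pixel, which is where the cost comes from.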

What does this mean for games?

Ray tracing provides the basis for realistic simulation of light transport through a 3D scene. With traditional real-time rendering methods, it is difficult to generate realistic shadows and reflections without resorting to various techniques that approximate, at best. The beauty of ray tracing is that it mimics natural phenomena. The algorithm also allows pixel colour/ray computation to have a high degree of independence - thus lending itself well to running in parallel on suitable architectures, i.e. modern-day GPUs.

The basic Whitted ray tracing algorithm isn't without limitation, though. The computation requirements are an order of magnitude greater than found in rasterization. It also fails to generate a truly photorealistic image because it only represents a partial implementation of the so-called rendering equation. Photorealism only occurs when we can fully (or closely) approximate this equation - which considers all physical effects of light transport.

To generate more photorealistic results, additional ray tracing techniques were developed, such as path tracing. This is a technique that NVIDIA has already demonstrated on their RTX cards, and it is popular in offline rendering (for example, the likes of Pixar). It's one thing to use path tracing for generating movie-quality visuals, but to use it in real-time is a remarkable undertaking. Until those levels of performance arrive we must rely on assistance from elsewhere and employ novel techniques that can massively reduce the costs of generating acceptable visuals. This is where AI, deep-learning, and Tensor Cores can help.

Generating quality visuals with ray tracing requires a monumental number of rays to be traced through the scene for each pixel being rendered. Shooting a single ray per pixel (a single sample) is not enough and results in a noisy image. To improve the quality of the generated image, ray tracing algorithms typically shoot multiple rays through the same pixel (multiple samples per pixel) and then use a weighted average of those samples to determine the final pixel colour. The more samples taken per pixel, the more accurate the final image. This increase in visual fidelity comes at a processing cost and, consequently, causes a significant performance hit.
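A toy model shows why one sample is noisy and why averaging helps. In the sketch below each sample is a random light path that either reaches the light (value 1.0) or does not (value 0.0); the 30% hit probability, the equal weighting of samples, and the function names are all assumptions chosen for illustration, not part of any real renderer.

```python
import random


def sample_radiance(rng, hit_probability=0.3):
    """One random light path: 1.0 if it reaches the light, otherwise 0.0."""
    return 1.0 if rng.random() < hit_probability else 0.0


def estimate_pixel(samples_per_pixel, seed=0):
    """Average many samples to estimate the true pixel brightness (0.3 here)."""
    rng = random.Random(seed)
    total = sum(sample_radiance(rng) for _ in range(samples_per_pixel))
    return total / samples_per_pixel


for spp in (1, 10, 100, 1000, 10000):
    print(spp, estimate_pixel(spp))
```

With a single sample the pixel can only come out fully black or fully white, which is the speckled noise seen in low-sample renders. The estimate closes in on 0.3 as the sample count rises, but the error only shrinks with the square root of the number of samples, so each step up in quality costs disproportionately more work.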

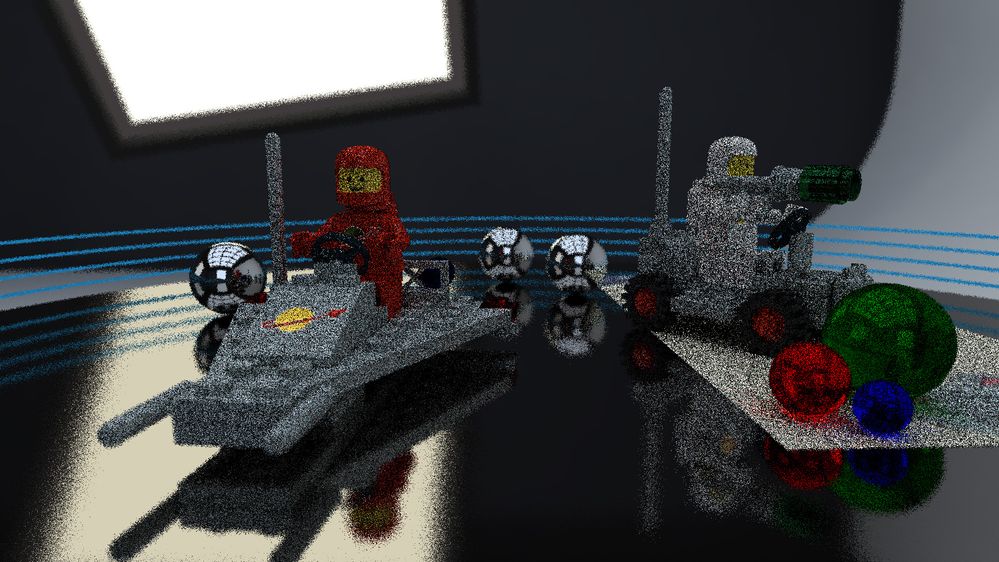

The images below are from my own real-time path tracer, giving an indication of how sample rates affect visual quality and how expensive it is to generate higher-quality results. The algorithm is running in parallel across the full array of CUDA cores on a previous generation NVIDIA GTX 1080 Ti GPU and rendering at an HD resolution of 1920x1080. Please be aware that the generated metrics are for indicative purposes only.

Image 1

Samples per pixel: 1

Render time: <0.1s

In this first image, the scene is rendered using only a single sample per pixel. The result is an incredibly noisy image with lots of missing colour information, and ugly artefacts. As gamers, we would not be happy if games looked like this. However, it was fairly quick to render thanks to the low sample rate.

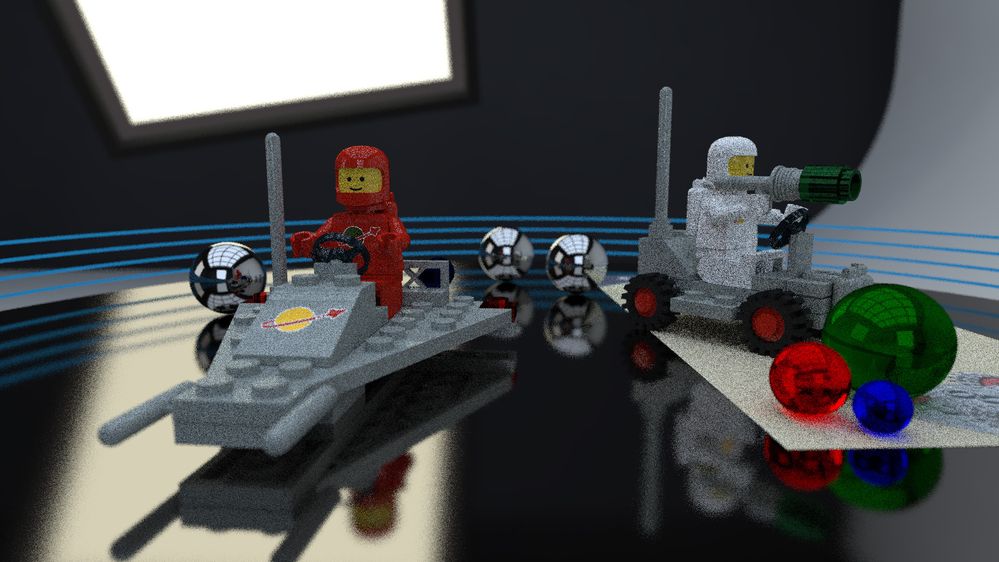

Image 2

Samples per pixel: 10

Render time: ~1.9s

This time the scene is rendered using 10 samples per pixel. The result is an improvement over the first image, but still shows a lot of noise. We are still some way short of being able to say that the image quality is sufficient.

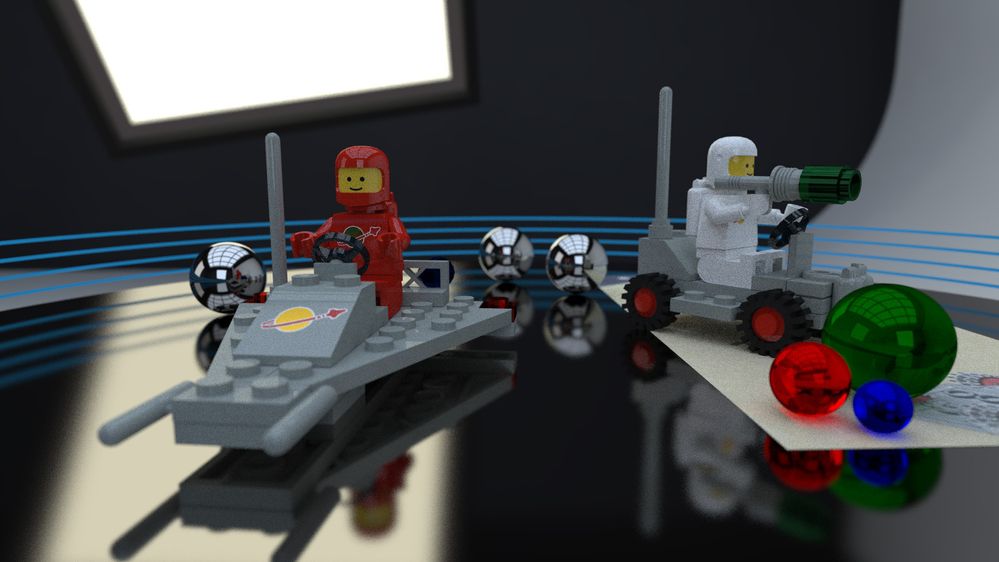

Image 3

Samples per pixel: 100

Render time: ~20.4s

The scene is now rendered at 100 samples per pixel. The image quality is much better. However, it's still not quite as good as we'd perhaps want. The improved quality comes with a cost, too - taking over 20 seconds to reach this level of quality (for a single frame).

Image 4

Samples per pixel: 1000

Render time: ~206.5s

The image quality shown here is improved compared to the examples generated using fewer samples. It could be argued that the quality is now good enough to be used in a game. Reflections, refractions, depth of field, global illumination, ambient occlusion, caustics, and other effects can be observed, and all appear to be at a reasonably good quality level. The downside is that this single frame took over 206 seconds to render, or 0.005fps.

Gaming GPUs must be able to generate ray traced imagery at interactive frame rates, which is no small feat. At 60fps, the GPU must render the image (including ray tracing) within approximately 16 milliseconds. This is far from trivial given the current level of technology. Pixar, who employ fundamentally similar ray tracing algorithms to generate movie-quality CGI for their blockbuster movies, have render farms dedicated to the task. Even with a render farm consisting of 24,000 CPU cores, the movie Monsters Inc. took two years to render (with an average frame render time of over 20 hours). That's the reality of ray tracing and how astronomically intensive it can be to generate beautiful images.
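The gap between those render times and an interactive frame rate is simple arithmetic, sketched below using the 206.5 second figure from Image 4. The function names are made up for the example.

```python
def frame_budget_ms(target_fps: float) -> float:
    """Time available to render one frame at a given frame rate."""
    return 1000.0 / target_fps


def frames_per_second(render_seconds: float) -> float:
    """Frame rate achieved if every frame takes render_seconds."""
    return 1.0 / render_seconds


def speedup_needed(render_seconds: float, target_fps: float) -> float:
    """How many times faster rendering must get to hit the target frame rate."""
    return render_seconds * target_fps


print(f"{frame_budget_ms(60):.2f} ms per frame at 60fps")
print(f"{frames_per_second(206.5):.4f} fps at 1000 samples per pixel")
print(f"{speedup_needed(206.5, 60):.0f}x speed-up needed")
```

In other words, the 1000-sample frame would need to render roughly 12,390 times faster to fit inside a 16.67 ms budget, which is why real-time implementations cut the sample count drastically and lean on denoising instead.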

Recursion Depth

In addition to the number of samples per pixel, it is also important to allow a sufficient number of bounces (recursion depth) for each ray being traced through the scene. Each time the ray hits an object it bounces off in another direction, depending on the material properties of the object hit. Eventually, it will terminate when it crosses a given threshold or hits no further objects, at which point it escapes the environment. The bounced rays provide the colour information needed to realise reflections, refractions, and global illumination amongst other visual elements. The more bounces a ray is permitted to make, the more realistic the pixel colour that is generated.

A good example is a ray being traced through a room full of mirrors, which may be bounced around the scene many times. Whilst this will result in great-looking reflections, it also incurs a large performance hit. To alleviate this issue, a ray tracer will typically set a limit on the number of bounces a ray can make, providing a means to trade visual quality against computation cost. With too few bounces, rendering issues may be seen: for example, incomplete reflections, poor global illumination results, and artefacts across object surfaces where insufficient colour information has been generated to accurately realise the surface material. On the other hand, too many bounces can result in extreme performance costs with minimal increases in visual quality. After a given number of bounces, the difference that additional bounces make may not even be noticeable.
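The diminishing return from extra bounces can be shown with a toy model of the mirror room. This is a hypothetical sketch, not how any particular renderer works: the `trace` function, the 0.8 reflectivity, and the single brightness value per surface are all assumptions made for illustration.

```python
def trace(depth_left, reflectivity=0.8, surface_colour=1.0):
    """Brightness seen along a ray bouncing between two facing mirrors.

    Toy model: every hit adds the surface's own colour scaled by
    (1 - reflectivity), plus whatever the reflected ray sees scaled
    by reflectivity.
    """
    if depth_left == 0:
        return 0.0  # bounce limit reached: the ray contributes nothing more
    own = (1.0 - reflectivity) * surface_colour
    return own + reflectivity * trace(depth_left - 1, reflectivity, surface_colour)


previous = 0.0
for max_bounces in (1, 2, 4, 8, 16, 32):
    value = trace(max_bounces)
    print(f"{max_bounces:>2} bounces: brightness {value:.4f} "
          f"(gain {value - previous:+.4f})")
    previous = value
```

With only one or two bounces the result is far darker than the converged value, which corresponds to the incomplete reflections described above. Going from 16 to 32 bounces doubles the work per ray but changes the result by less than 3% in this model.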

This incredible technology breakthrough has barely hit home yet, but it's the beginning of an exciting revolution in both the graphics and gaming industries. Battlefield 5, Shadow of the Tomb Raider, and Metro Exodus are set to use RTX technologies for various rendering purposes, so being able to test this for ourselves is only a short while away.

10-29-2018 12:23 PM

12-28-2018 09:57 AM

01-07-2019 03:54 PM

I'm curious what are the better practices of stability testing this thing. I am at +90 core clock and +600 mem clock. When I run 3DMark Time Spy Extreme Stress Test, the test goes through fine but sometimes ends up below the 97% mark, at like 96%ish. Temperatures are <80C all the time (top is mostly around 77C) and the card can surely go all the way to 88 if it wanted to. So maybe there's some sort of background notification coming in (got focus mode enabled) or some sort of update check during the test 😕

So my question is.. Is this stable and I can leave it there? Or is this unstable, will affect my gaming stability and frame rates, and I should move it down so I always get 97%+?

01-11-2019 01:04 AM

SofMaz wrote:

Thank you for the guide. Can you really confirm that at the 125% power limit the GPU draws a maximum of 245 Watts? Some reviews measured around 260-300 Watts. Could you show a graph or table with the power consumption at stock and overclocked, to give a price / performance comparison? I would like to overclock, but electricity costs and performance increase should go more or less hand in hand.

Gaming load with the increased TDP will top out around 245-250W with the factory BIOS, yes. Peak load may vary depending on the type of load, testing conditions and methodology.

Eclipt wrote:

I just noticed that this guide is a forum post, and had already created a new thread in the overclocking section. Anyhow, my issues are below:

I'm curious what are the better practices of stability testing this thing. I am at +90 core clock and +600 mem clock. When I run 3DMark Time Spy Extreme Stress Test, the test goes through fine but sometimes ends up below the 97% mark, at like 96%ish. Temperatures are <80C all the time (top is mostly around 77C) and the card can surely go all the way to 88 if it wanted to. So maybe there's some sort of background notification coming in (got focus mode enabled) or some sort of update check during the test 😕

So my question is.. Is this stable and I can leave it there? Or is this unstable, will affect my gaming stability and frame rates, and I should move it down so I always get 97%+?

If the test isn't crashing then you're most likely stable. The pass rate gets lower as the sustained Boost Clock is reduced. In this instance it will be due to temperature. You can settle for this, or experiment with the fan curve in order to reduce temperature. Time Spy Extreme is quite taxing, so it's probable that you will see a higher sustained frequency in games.
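For context on the 97% figure: to the best of our understanding, the 3DMark stress test reports frame rate stability as the worst loop score divided by the best loop score, with 97% or higher counting as a pass. The sketch below uses made-up loop scores to show how a card that settles at a lower sustained boost clock as it warms up can land just under the mark without any crash or error.

```python
def frame_rate_stability(loop_scores):
    """Worst loop score as a percentage of the best loop score."""
    return min(loop_scores) / max(loop_scores) * 100.0


# Made-up scores for illustration: the first loops run cool, later loops
# settle at a lower sustained boost clock once the card has warmed up.
loop_scores = [4510, 4465, 4402, 4371, 4355, 4349, 4344, 4340]

stability = frame_rate_stability(loop_scores)
print(f"Frame rate stability: {stability:.1f}% "
      f"({'pass' if stability >= 97.0 else 'below the 97% mark'})")
```

Because the first, coolest loop sets the best score, a lower steady-state temperature (for example from a more aggressive fan curve) narrows the gap between the best and worst loops.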

01-11-2019 08:57 AM

Silent Scone@ASUS wrote:

If the test isn't crashing then you're most likely stable. The pass rate gets lower as the sustained Boost Clock is reduced. In this instance it will be due to temperature. You can settle for this, or experiment with the fan curve in order to reduce temperature. Time Spy Extreme is quite taxing, so it's probable that you will see a higher sustained frequency in games.

The problem is that in the 3D Mark stress test report, the temperature of the card does not go over 77C. Can the <97% still be due to temperature?

01-12-2019 02:00 PM

Eclipt wrote:

The problem is that in the 3D Mark stress test report, the temperature of the card does not go over 77C. Can the <97% still be due to temperature?

Yes, as the GPU temperature increases beyond 60C, GPU Boost 4.0 will drop to a lower clock bin at staged intervals until the temperature settles.

01-18-2019 11:35 PM

I just acquired a pair of Strix 2080 Ti O11G cards. As stated pretty much everywhere, power limits are the deciding factor with these high-current and HOT cards. The heatsink does a good job of removing the heat, but the cards also cover 3 slots. I normally OC cards using GPU Tweak, with the first step being sliding voltage and power to max and then adjusting from there. On these cards that actually has an adverse effect because of the power limit, and you won't be able to get as good an OC. On both samples I have, I went to +150 on the core with the voltage left as is, keeping power draw down. I then increased a little at a time, running Time Spy in between to check for stability. I could get up to +175 with the voltage up, but that put me directly into the power limit and resulted in more heat and less performance. Where I ended up was +160 on the core, +500 on memory (got this higher but scores dropped) and the voltage slider at +50%. This got me a stable OC with the least amount of voltage, running right on the hairy edge of the power limit, only hitting it very briefly once or twice during benchmarks but mostly peaking around 123%-124%. So my advice, FWIW, is to max out the power limit slider, then increase core until it becomes unstable, then move the voltage up a little at a time. Like I said, I went to +150 without moving the voltage at all.
